So for a fair while now, I have been trying to piece together a way of adjusting the overall contrast and white balance of a film negative scan (altering the scan's DMax to reflect something based on the stock's Status M graph). This would happen before doing the traditional printer-offset-style color grading and then running the result through a PFE to get the final image.

I understand that none of this can really be distilled into an exact science given how wobbly photochemical work is, but when I am given scans that have wildly incorrect levels and balances, I'd like to ballpark them as closely as I can to get something consistent.
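To make the idea concrete, here is a rough sketch of the kind of pre-grade adjustment I mean, done per channel in density rather than on raw code values. It assumes the usual Cineon 10-bit convention of 0.002 density per code value and the commonly quoted 0.025 density per printer point. The function names and the DMin / DMax numbers in the usage note are placeholders I made up for illustration, not values taken from any stock's datasheet or from ColourSpace.

```python
# Assumption: Cineon 10-bit encoding, 0.002 printing density per code value.
DENSITY_PER_CV = 0.002


def cv_to_density(cv):
    """Convert a 10-bit Cineon code value to density (relative to code value 0)."""
    return cv * DENSITY_PER_CV


def density_to_cv(density):
    """Convert density (relative to code value 0) back to a 10-bit Cineon code value."""
    return density / DENSITY_PER_CV


def rebalance_channel(cv, scan_dmin_cv, scan_dmax_cv, target_dmin, target_dmax):
    """Linearly remap one channel in density so that the scan's measured
    DMin / DMax code values land on the target densities (hypothetical helper)."""
    d = cv_to_density(cv)
    d_lo = cv_to_density(scan_dmin_cv)
    d_hi = cv_to_density(scan_dmax_cv)
    gain = (target_dmax - target_dmin) / (d_hi - d_lo)  # contrast correction
    return density_to_cv(target_dmin + (d - d_lo) * gain)


def printer_offset(cv, points, density_per_point=0.025):
    """Apply a printer-light style offset: a constant density shift per channel.
    density_per_point is an assumption; adjust it to your own convention."""
    return cv + points * density_per_point / DENSITY_PER_CV
```

For example, `rebalance_channel(120, 120, 820, 0.19, 1.37)` pulls a channel whose base was scanned at code value 120 back to roughly 95, and `printer_offset(445, 2)` shifts a value by about 25 code values. Whether the targets should come from the Status M curves directly or from a printing density metric is exactly the part I am unsure about.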

However, I recently came across a peculiar quandary regarding this process: apparently, depending on how a negative is to be printed, the expected input printing density is altered to match that output.

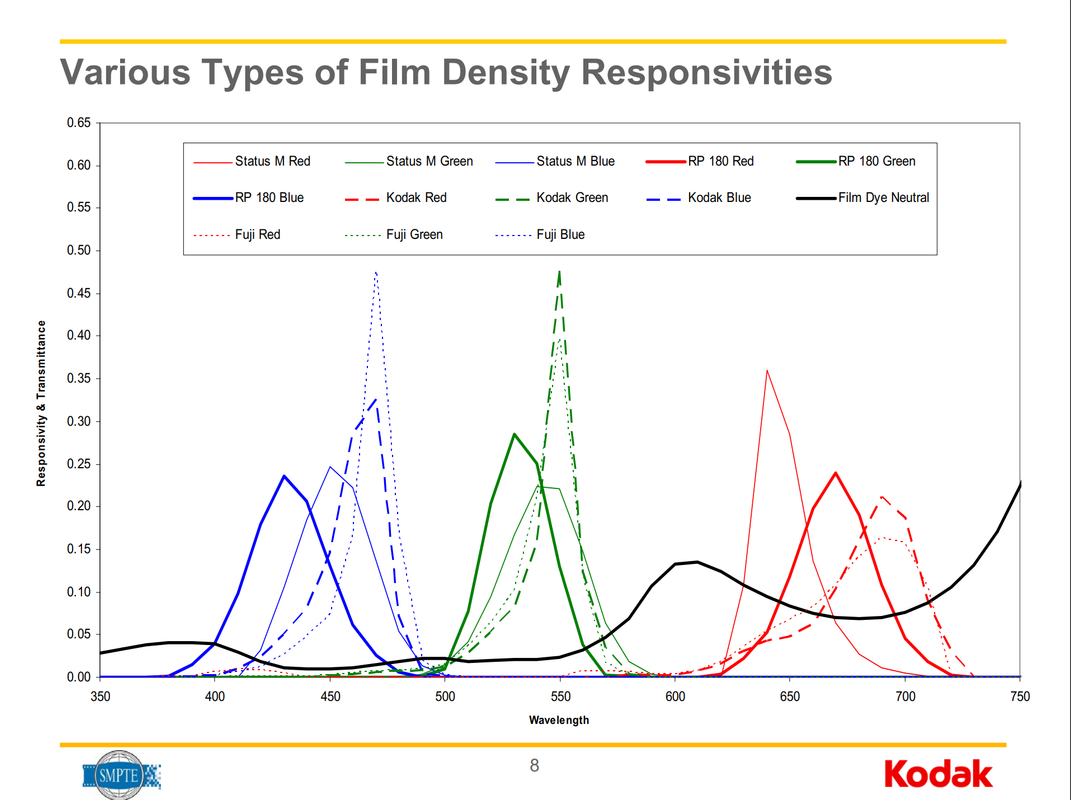

Besides the relatively standardized SMPTE RP 180 printing density and ACES' ADX / APD, Kodak apparently worked out averaged figures in the past for the printing density expected when printing onto their own stock, as well as for what is expected when printing onto Fuji.

So unless I am horribly misunderstanding how this process works, my main question is what sort of density input is expected for the PFEs that are built into ColourSpace?

The manual material floating around on the LightIllusion site just vaguely says that the PFEs expect a Cineon input, and the Film Profiling services page makes note of both SMPTE RP 180 and APD. But does that mean the input log scan should be altered to reflect what that particular stock expects (Kodak for Vision & Premier, Fuji for Fuji), or does the LUT expect the standard SMPTE RP 180 or ADX / APD measurement and automatically alter the input?

Alternatively, is there any sort of rule of thumb that could be derived from a film stock's Status M measurements when working with a scan that is completely off the mark?

Thank you in advance!